The Cerebras AI 250M Series 4B is a supercomputer on a single chip, designed to deliver high performance for AI workloads. With 2.6 trillion transistors, it is the largest chip ever built, and that transistor budget translates into an enormous amount of computational power. Its size lets a single device take on large AI models that would otherwise have to be split across many smaller processors.

The chip packs 850,000 AI-optimized cores, each capable of running multiple concurrent threads. Because these cores work in parallel, an AI workload can be spread across the whole chip, which shortens both training and inference times. With the Cerebras AI 250M Series 4B, researchers and developers can work with larger and more complex AI models without partitioning them across a cluster of smaller devices.

Efficient and Scalable Architecture

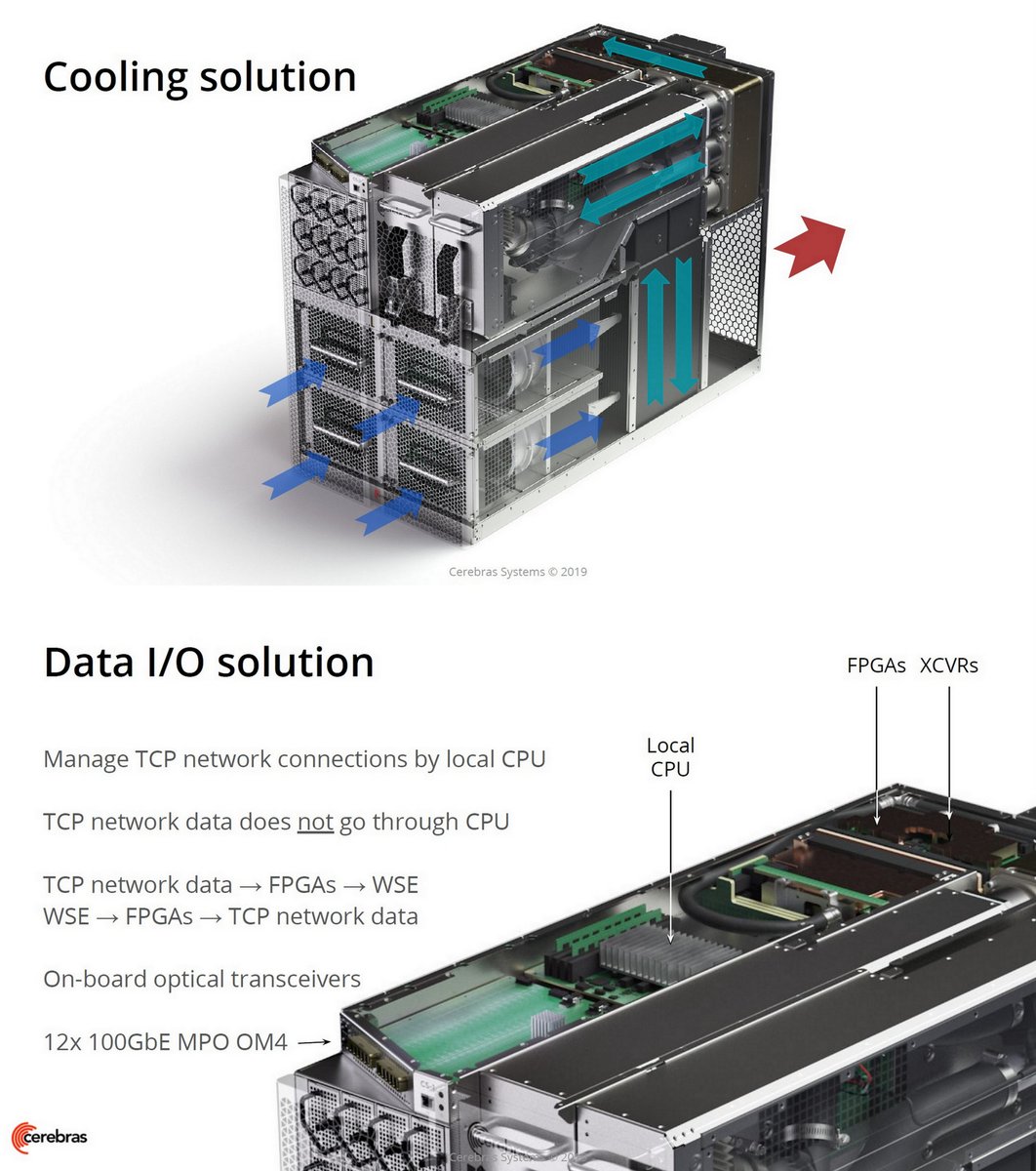

The Cerebras AI 250M Series 4B is built on an architecture designed for efficiency and scalability. Traditional GPU-based systems spread a workload across many chips connected by external networks; this chip instead places its cores, memory, and interconnect on a single wafer-scale die. Data therefore travels over on-chip wiring rather than between separate chips, which reduces both latency and energy consumption.

The chip also distributes its memory across the die, next to the cores that use it. Keeping data close to where it is processed minimizes data movement and shortens access times, which further improves performance. For workloads that outgrow a single chip, multiple chips can be interconnected to build even more powerful AI systems.

Enhanced AI Model Training

Training AI models is computationally intensive and demands significant resources. The Cerebras AI 250M Series 4B is designed for exactly this workload: its massive parallelism, combined with its on-chip memory, lets researchers and data scientists train large-scale models efficiently and shortens training runs.

The Cerebras AI 250M Series 4B also supports mixed-precision training, a technique that performs most calculations in a lower-precision format (for example, 16-bit floating point) while keeping precision-sensitive values, such as the master copy of the model weights, in higher precision. Lower-precision arithmetic is faster and needs less memory per value, so users can train larger models with the same resources. This support makes the chip well suited to AI research and development.
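The article does not describe how mixed precision is programmed on this chip, so the following is only a hardware-agnostic sketch of the technique itself. It emulates 16-bit floating point (fp16) in pure Python by rounding values through the standard library's `struct` half-precision format (`"e"`), computes the forward and backward passes in fp16, and applies updates to a full-precision master weight. The names `to_fp16` and `train_mixed_precision` are hypothetical, and the loss scaling that production frameworks typically add is omitted for brevity.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (fp16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def train_mixed_precision(xs, ys, lr=0.01, steps=200):
    """Fit y = w * x by gradient descent on mean squared error.

    Hypothetical illustration: the forward and backward passes are rounded
    to fp16, while the master weight and the update stay in full precision.
    """
    w_master = 0.0  # full-precision master copy of the weight
    for _ in range(steps):
        w16 = to_fp16(w_master)  # low-precision copy used for compute
        grad16 = 0.0
        for x, y in zip(xs, ys):
            x16, y16 = to_fp16(x), to_fp16(y)
            err16 = to_fp16(w16 * x16 - y16)              # forward pass in fp16
            grad16 = to_fp16(grad16 + 2.0 * err16 * x16)  # backward pass in fp16
        # Average the gradient and apply the update to the full-precision weight.
        w_master -= lr * (grad16 / len(xs))
    return w_master


# Memory: an fp16 value takes 2 bytes, an fp32 value takes 4.
print(struct.calcsize("<e"), struct.calcsize("<f"))  # 2 4

# Why the master copy matters: an update this small vanishes when added in fp16.
print(to_fp16(1.0 + 1e-4) == 1.0)  # True
print(1.0 + 1e-4 == 1.0)           # False

w = train_mixed_precision([1.0, 2.0, 3.0, 4.0], [2.5, 5.0, 7.5, 10.0])
print(round(w, 2))
```

Near the optimum the per-step updates become too small to register in fp16, but they still accumulate in the full-precision master weight, which is the main reason mixed-precision schemes keep such a copy.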

Accelerating Inference Workloads

Beyond training, the Cerebras AI 250M Series 4B also runs inference workloads. Inference is the process of using a trained AI model to make predictions or decisions on new data. The chip's parallelism and low-latency design let it serve inference requests quickly, enabling real-time decision-making in a range of applications.

The Cerebras AI 250M Series 4B also supports model compression techniques, which shrink trained models with little or no loss of accuracy. Smaller models run inference faster and need less memory, and they are also easier to deploy on resource-constrained edge devices. Whether in autonomous vehicles, healthcare systems, or smart cities, these capabilities help AI applications deliver real-time insights and actions.
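The article does not say which compression techniques the platform supports. Post-training quantization is one common example, and the pure-Python sketch below illustrates it under that assumption: each fp32 weight is stored as an 8-bit integer plus one shared scale factor. The functions `quantize_int8` and `dequantize_int8` are hypothetical names for illustration, not part of any Cerebras API.

```python
import struct


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Map int8 codes back to approximate float values."""
    return [scale * v for v in q]


weights = [0.82, -1.37, 0.05, 2.41, -0.66, 0.0013]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

# Storage: 4 bytes per fp32 weight vs 1 byte per int8 code plus one fp32 scale.
fp32_bytes = len(struct.pack(f"<{len(weights)}f", *weights))
int8_bytes = len(struct.pack(f"<{len(q)}b", *q)) + struct.calcsize("<f")
print(fp32_bytes, int8_bytes)  # 24 10

# Quantization is lossy: each weight is off by at most half a quantization step.
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(max_err <= scale / 2)  # True
```

For large tensors the single shared scale is negligible, so storage approaches a 4x reduction; the price is the bounded rounding error shown in the last check, which is why compression usually costs a small amount of accuracy rather than none.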

Conclusion

The Cerebras AI 250M Series 4B pushes the boundaries of AI computing. Its computational power, efficient architecture, and support for advanced training and inference techniques let researchers, data scientists, and developers tackle larger and more complex AI models, accelerating innovation across industries. As AI continues to evolve, the Cerebras AI 250M Series 4B is positioned at the forefront of the next wave of AI advancements.